Project Video

CopterHack-2023, team Clover with Motion Capture System. Click logo for project video.

Table of Contents

- Team Information

- Educational Document

- Introduction

- Project Description

- Hardware

- Data Transfer

- Examples

- Trajectory Tracking

- Auto-Tuning

- Conclusion

Team Information

The list of team members:

Educational Document

A detailed, step-by-step analysis of the project proposed for the CopterHack 2023 competition can be found in my Gitbook: MoCap Clover Gitbook.

This page gives a broad overview of the motivation and purpose behind this project. It also provides research- and industry-based knowledge on UAV applications that the reader may find interesting. Readers interested in the technical details and implementation should refer to the educational Gitbook document.

Introduction

Aerial robotics has become a common focus in research and industry over the past few decades. Many technical developments in research require a controlled test environment to isolate certain characteristics of the system for analysis. This typically takes place indoors to eliminate unwanted disturbances, which makes results more predictable. Localization and pose feedback concerns can be removed with motion capture (MoCap) systems, which track the pose of unmanned aerial vehicles (UAVs) with high precision, as stated:

"OptiTrack’s drone and ground robot tracking systems consistently produce positional error less than 0.3mm and rotational error less than 0.05°" [reference].

This enables researchers to study the dynamics and behavior of UAVs in different environments, evaluate their performance, and develop advanced control algorithms for improved flight stability, autonomy, and safety. Research facilities around the world tend to build research drones from the ground up using off-the-shelf components with open source platforms such as PX4. While the end goal is the same (transferring pose feedback to the flight controller along with high-level commands), the platforms and methods can vary significantly depending on factors such as onboard and offboard computing frameworks and data transfer methods. Many developers have a detailed background in, and understanding of, the theoretical components of their research; however, adapting hardware configurations such as sensor feedback and sensor fusion to their own platform is not obvious. The purpose of this project is to provide detailed documentation on integrating the Clover platform with the MoCap system, along with examples to familiarize users with the hardware, sensor fusion, high- and low-level controller development, and trajectory tracking.

Project Description

In this article, we will provide an overview of MoCap systems for tracking UAV pose in research applications, highlighting their significance, advantages, and potential impacts in the field of UAV controller development.

Document structure

The Motion Capture System educational document is divided into three main sections in addition to the Introduction and Conclusion. Each section and its purpose are described below:

Hardware

The main goal in this section is to educate the reader on the MoCap system hardware and software. This can be further divided into several steps including camera placement, marker placement, and system calibration. A summary of the process is provided:

| Task | Description |
|---|---|
| Camera Placement | Position the motion capture cameras in strategic locations around the area where the UAV will be flying. The number of cameras and their placement will depend on the size of the area and the desired capture volume. Typically, cameras are placed on tripods or mounted on walls or ceilings at specific heights and angles to capture the UAV's movements from different perspectives. A simple 4-camera setup example is provided in the educational document. |
| Marker Placement | Attach OptiTrack markers to the UAV in specific locations. OptiTrack markers are small reflective spheres that are used as reference points for the motion capture system to track the UAV's position and movements. An example placement on the Clover is shown in the educational document. |
| System Calibration | Perform system calibration to establish the spatial relationship between the cameras and the markers. This involves capturing a calibration sequence, during which a known pattern or object is moved in the capture volume. The system uses this data to calculate the precise positions and orientations of the cameras and markers in 3D space, which is crucial for accurate motion capture. |

With these components completed correctly, you are well on your way to commanding indoor autonomous missions like this:

Overall, configuring a motion capture system for UAV research requires careful planning, precise marker placement, accurate system calibration, and thorough validation to ensure accurate and reliable data collection for your research purposes. For more information, refer to the informative documentation.

Data Transfer

With the data acquired from the MoCap system, the main goal in this section is to transfer it to the Raspberry Pi onboard the Clover and remap it to the flight controller/PX4 for control. A summary of the steps is listed below:

- Data Acquisition: The motion capture system continuously tracks the position and orientation (pose) of the UAV using markers attached to the UAV and cameras positioned in the capture volume. The system calculates the 3D pose of the UAV in real time, and the result can be viewed through the Motive software.

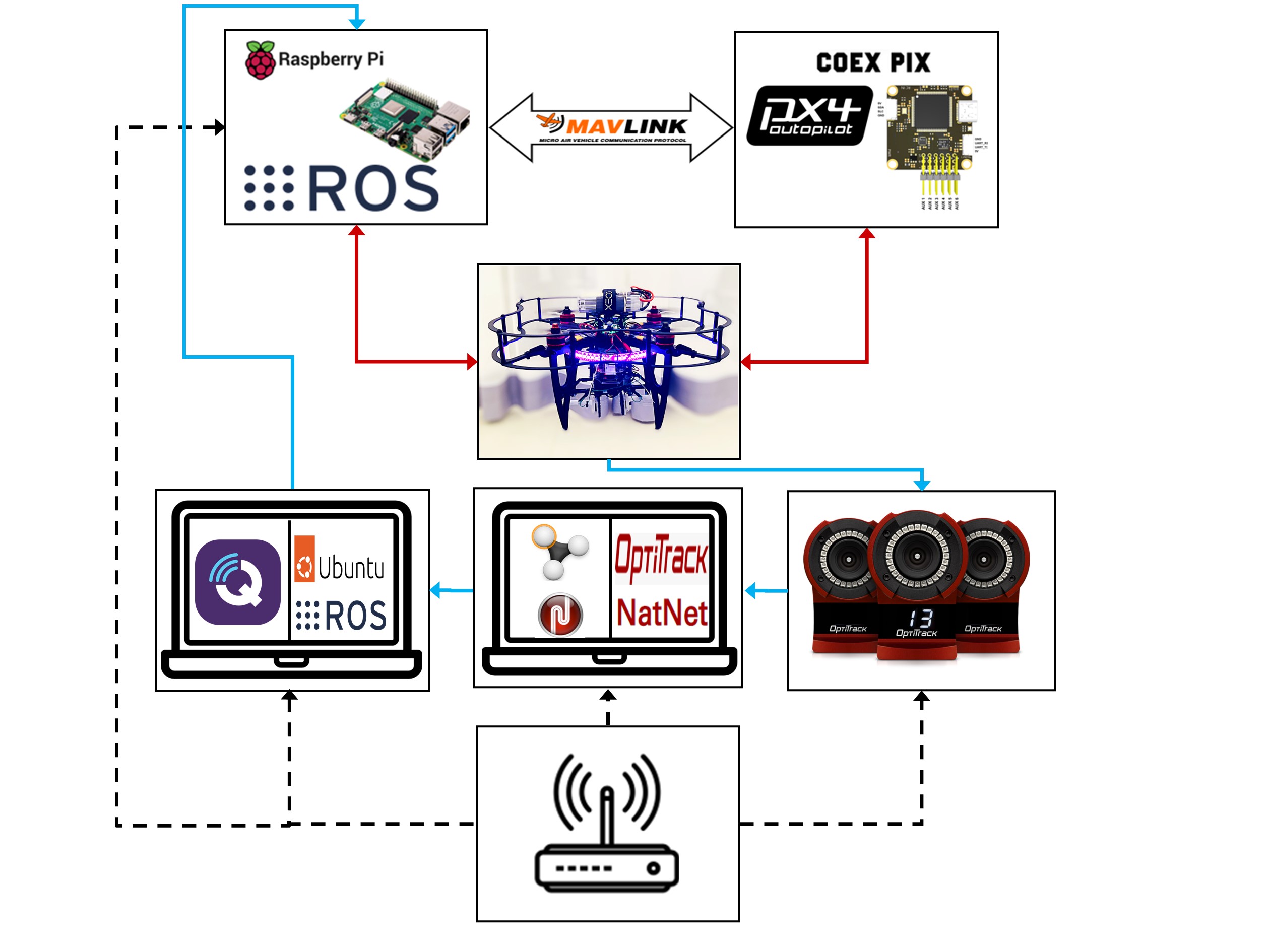

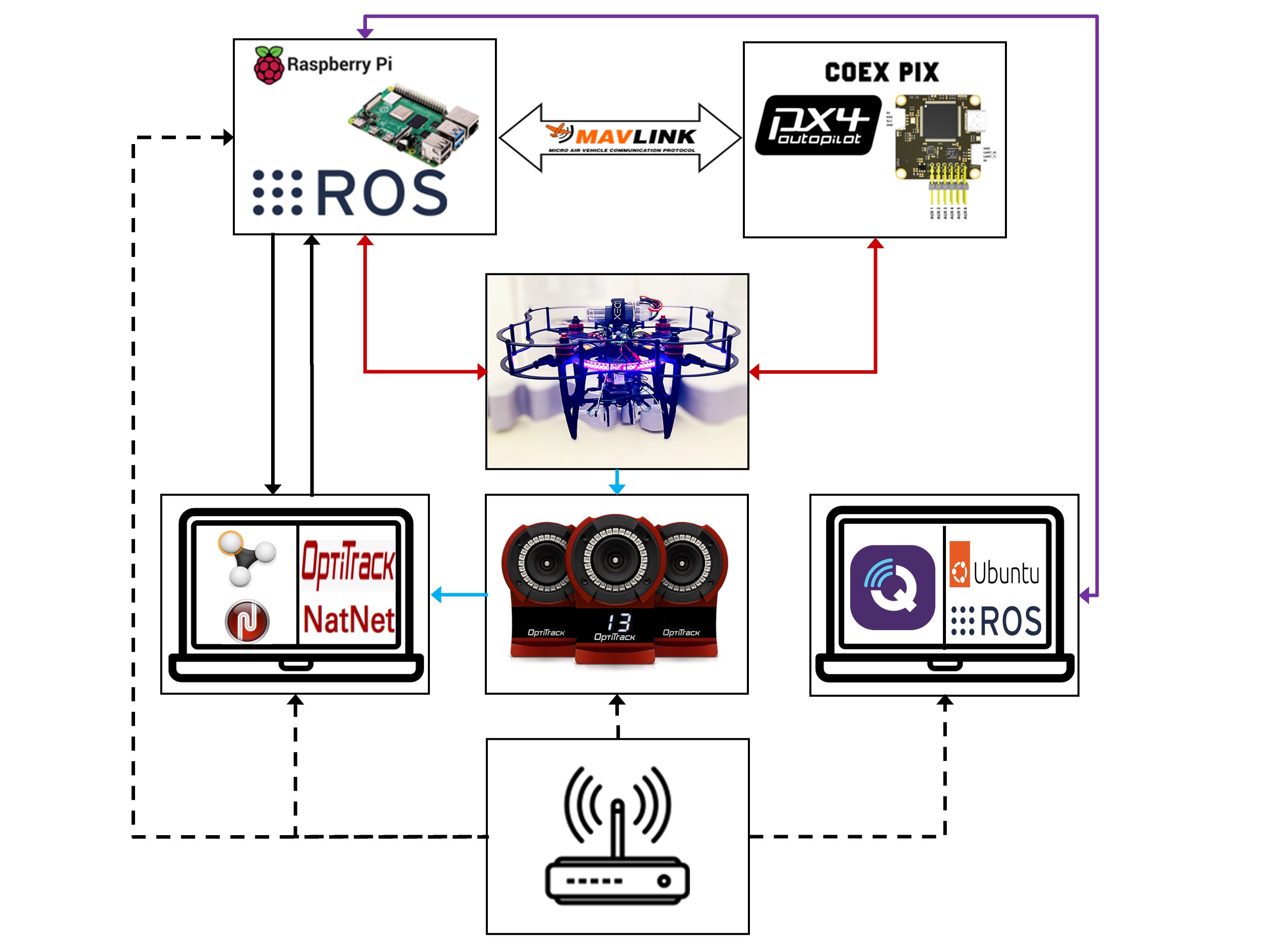

- Data Transmission: The pose data is transmitted from the motion capture system to a Raspberry Pi using VRPN and a ROS network. While this works, I have also implemented a strictly UDP data transmission method; documenting its setup process and ease of use is left as future development. Both configurations are shown in the figures below. The Raspberry Pi acts as an intermediary that processes the data and relays it to the flight controller onboard the UAV using MAVROS. The connection can be established over USB or UART; I chose UART in my setups.

Fig.1(a) - Left figure: ROS network experimental setup topology. Legend: Black dotted line is the provided local network; Blue solid line is the Clover pose transmission where the final transmission from laptop to Pi is over a ROS network; Red line is hardware connections; MAVLink arrow is communication via a MAVLink protocol.


Fig.1(b) - Right figure: UDP transmission experimental setup topology. Legend: Black dotted line is the provided local network; Black solid line is the UDP client-server drone pose transmission; Light blue line is the pose data transmission; Red line is hardware connections; Purple line is communication via secure shell protocol and ROS network communication; MAVLink arrow is communication via a MAVLink protocol.

- Data Processing: The Raspberry Pi receives the pose data from the motion capture system over a ROS network on a VRPN ROS topic. The data is initially parsed from the sensor readings into position and attitude.

- Data Remapping: Once the pose data is processed, the Raspberry Pi remaps it to a gateway/MAVROS topic, which sends it to the flight controller onboard the UAV. All coordinate transformations (ENU->NED) are handled by MAVROS.

- Flight Control Update: The flight controller onboard the UAV receives the remapped pose data and uses it to update the UAV's flight control algorithms. The updated pose information can be used to adjust the UAV's flight trajectory, orientation, or other control parameters to achieve the desired flight behavior or control objectives based on the motion capture system feedback.

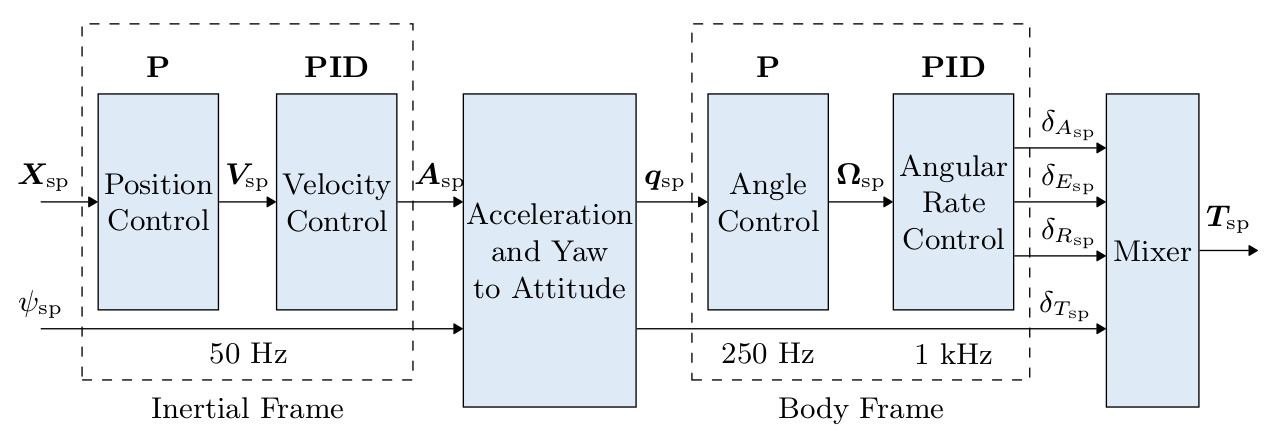

- Closed-Loop Control: The flight controller continuously receives pose feedback from the motion capture system via the Raspberry Pi, and uses it to update the UAV's flight control commands in a closed-loop fashion (PX4 uses a cascaded PID control system with more details provided in the educational document). This allows the UAV to maintain precise position and orientation control based on the real-time pose data provided by the motion capture system.
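The ENU->NED conversion named in the Data Remapping step is handled by MAVROS, so no user code is required for it. Purely to illustrate what that change of world frame does to a pose, here is a minimal Python sketch. The function names are hypothetical and are not part of MAVROS, and body-frame conventions are not covered.

```python
import math


def enu_to_ned_position(east, north, up):
    """Map an ENU position (East, North, Up) to NED (North, East, Down)."""
    return (north, east, -up)


def enu_to_ned_yaw(yaw_enu):
    """Map a heading measured counter-clockwise from East (ENU) to one
    measured clockwise from North (NED), wrapped to [-pi, pi]."""
    yaw_ned = math.pi / 2.0 - yaw_enu
    return math.atan2(math.sin(yaw_ned), math.cos(yaw_ned))


# Hypothetical MoCap reading: 1 m east, 2 m north, 0.5 m above the origin,
# with the vehicle facing north.
print(enu_to_ned_position(1.0, 2.0, 0.5))
print(enu_to_ned_yaw(math.pi / 2.0))
```

Swapping the first two axes and negating the third is its own inverse, so the same position function also maps NED back to ENU.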

Overall, sending pose feedback from a motion capture system to a Raspberry Pi and remapping the data to the flight controller onboard a UAV involves acquiring, processing, and transmitting the pose data in a compatible format to enable real-time closed-loop control of the UAV based on the motion capture system's feedback.
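The details of the UDP transmission method are not given on this page, so the following is only a sketch of how a pose could be sent as a single UDP datagram in Python. The packet layout (seven little-endian doubles: position followed by a quaternion) and all function names are assumptions made for illustration, not the format used in this project.

```python
import socket
import struct

# Hypothetical packet layout: x, y, z, qx, qy, qz, qw as little-endian doubles.
POSE_FORMAT = "<7d"
POSE_SIZE = struct.calcsize(POSE_FORMAT)  # 7 values * 8 bytes = 56 bytes


def pack_pose(pose):
    """Serialize a 7-element pose (x, y, z, qx, qy, qz, qw) to bytes."""
    if len(pose) != 7:
        raise ValueError("pose must contain exactly 7 values")
    return struct.pack(POSE_FORMAT, *pose)


def unpack_pose(data):
    """Deserialize bytes produced by pack_pose back into a 7-tuple."""
    if len(data) != POSE_SIZE:
        raise ValueError("unexpected packet size: %d" % len(data))
    return struct.unpack(POSE_FORMAT, data)


def send_pose(sock, address, pose):
    """Send one pose datagram to (host, port) over an existing UDP socket."""
    sock.sendto(pack_pose(pose), address)


def receive_pose(sock):
    """Block until one pose datagram arrives on a bound UDP socket."""
    data, _sender = sock.recvfrom(1024)
    return unpack_pose(data)
```

Under these assumptions, the ground station would create a socket with `socket.socket(socket.AF_INET, socket.SOCK_DGRAM)` and call `send_pose` with the address of the Raspberry Pi, while the Pi would bind a socket of the same type to a chosen port and call `receive_pose` in a loop. UDP offers no delivery guarantee, which is usually acceptable for a pose stream because a newer sample supersedes any lost one.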

Examples

This section provides two practical examples to help the user better understand the Clover platform, sensor fusion, UAV applications such as trajectory tracking, high level commands, and low level control. The reader will become familiar with an abundance of state-of-the-art open source UAV platforms/technologies such as:

| Platform | Description |
|---|---|
| PX4 | PX4 is an open-source flight control software for drones and other unmanned vehicles used on the Clover. It supports a wide range of platforms and sensors and is used in commercial and research applications. |
| Robot Operating System (ROS) | ROS is an open-source software framework for building robotic systems. It provides a set of libraries and tools for developing and managing robot software and is widely used in drone and robotics research. |
| MAVLink | MAVLink is a lightweight messaging protocol for communicating with unmanned systems. It is widely used in drone and robotics applications and provides a flexible and extensible communication framework. |
| QGroundControl (QGC) | QGC is an open-source ground control station software for drones and other unmanned vehicles. It provides a user-friendly interface for managing and monitoring drone flights and is widely used in commercial and research applications. |

- A figure-8 high-level trajectory generation: this example is outlined for both Software in the Loop (SITL) simulations and hardware testing with the Clover platform. Check out this interesting example from my trajectory tracking section!

Fig.2 - Lemniscate of Bernoulli [reference].
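As a rough illustration of the figure-8 shape, the sketch below samples waypoints along a lemniscate of Bernoulli using its standard parametric form, x = a cos(t) / (1 + sin²(t)) and y = a sin(t) cos(t) / (1 + sin²(t)). The function name, the constant-altitude choice, and the parameter values are assumptions for illustration; the trajectory generation used in the project itself is described in the Gitbook and may differ.

```python
import math


def lemniscate_waypoints(half_width, altitude, num_points):
    """Sample one lap of a lemniscate of Bernoulli at constant altitude.

    half_width is the distance from the centre to the tip of each lobe.
    Returns a list of (x, y, z) tuples.
    """
    waypoints = []
    for i in range(num_points):
        t = 2.0 * math.pi * i / num_points
        denom = 1.0 + math.sin(t) ** 2
        x = half_width * math.cos(t) / denom
        y = half_width * math.sin(t) * math.cos(t) / denom
        waypoints.append((x, y, altitude))
    return waypoints


# Hypothetical values: lobes reaching 1 m from the centre, flown at 1 m height.
for x, y, z in lemniscate_waypoints(half_width=1.0, altitude=1.0, num_points=8):
    print(f"x={x:+.3f} y={y:+.3f} z={z:.3f}")
```

The resulting points could then be sent to the flight stack as position setpoints; sampling at equal steps of t does not give equal spacing along the curve, so a real tracker would normally also schedule velocity along the path.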

Here's a summary of the importance of trajectory tracking for UAV applications:

- Navigation and Path Planning: Trajectory tracking allows UAVs to follow pre-defined paths or trajectories, which is essential for tasks such as aerial mapping, surveying, inspection, and monitoring.

- Precision and Safety: Trajectory tracking enables precise control of the UAV's position, velocity, and orientation, which is crucial for maintaining safe and stable flight operations. Precise trajectory tracking allows UAVs to avoid obstacles, maintain safe distances from other objects or aircraft, and operate in confined or complex environments with high precision, reducing the risk of collisions or accidents.

- Autonomy and Scalability: Trajectory tracking enables UAV autonomy, allowing them to operate independently without constant operator intervention. This enables UAVs to perform repetitive or complex tasks autonomously, freeing up human operators to focus on higher-level decision-making or supervisory roles. Trajectory tracking also facilitates scalable operations, where multiple UAVs can follow coordinated trajectories to perform collaborative tasks, such as swarm operations or coordinated data collection.

- Flexibility and Adaptability: Trajectory tracking allows UAVs to adapt their flight paths or trajectories in real-time based on changing conditions or objectives. UAVs can dynamically adjust their trajectories to accommodate changes in environmental conditions, mission requirements, or operational constraints, allowing for flexible and adaptive operations in dynamic or unpredictable environments.

In summary, trajectory tracking is crucial for UAV applications as it enables precise navigation, safety, autonomy, scalability, and adaptability to changing conditions. It plays a fundamental role in ensuring that UAVs can accomplish their missions effectively and safely, making it a critical component of UAV operations in various industries and domains.

- Clover adaptive auto-tuning: The second example shows the user how to implement the adaptive auto-tune module provided by PX4 to tune the low-level controllers or attitude control module. You can take a look into how this is accomplished with the Clover platform in the auto-tuning section.

Fig.3 - Cascaded PX4 control system [reference].

Auto-tuning is a much faster and easier way to tune a real drone and provides good tuning for most airframes. Manual tuning is recommended when auto-tuning does not work or when fine-tuning is essential. However, the manual process is tedious and difficult, especially for users with limited control background and experience. The Clover airframe provides sufficient base settings, and auto-tuning can further improve performance depending on the Clover being used.

Here's a summary of the importance of low-level controller performance for UAV applications:

- Flight Stability and Safety: The low-level controller, typically implemented as a PID (Proportional-Integral-Derivative) or similar control algorithm, governs the UAV's attitude and position control. Properly tuning the low-level controller ensures that the UAV remains stable during flight, with accurate and responsive control inputs. This is essential for safe and reliable UAV operations, as it helps prevent undesired oscillations, overshooting, or instability that can lead to crashes or accidents.

- Control Precision and Responsiveness: Accurate control is crucial for achieving precise and responsive UAV maneuvers, such as smooth trajectory tracking, precise hovering, or dynamic maneuvers. Proper tuning of the low-level controller allows for precise control of the UAV's attitude, position, and velocity, enabling it to accurately follow desired flight trajectories, respond to changing conditions or commands, and perform complex flight maneuvers with high precision.

- Adaptability and Robustness: UAV operations can be subject to varying environmental conditions, payload configurations, or operational requirements. Proper low-level controller tuning allows for adaptability and robustness, enabling the UAV to perform reliably and accurately across a wide range of conditions or mission requirements. Tuning the controller parameters can help account for changes in payload mass, wind conditions, or other external factors, ensuring stable and responsive flight performance.
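To make the PID structure named in the first bullet concrete, here is a textbook discrete PID controller in Python driving a toy plant. It is a sketch for intuition only: it is not PX4's implementation, the class and function names are hypothetical, and the plant (a rate that simply integrates the command) is a deliberately simplified assumption.

```python
class PID:
    """Textbook discrete PID controller with output saturation.

    No anti-windup is included; the integral keeps growing while saturated.
    """

    def __init__(self, kp, ki, kd, output_limit=float("inf")):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.output_limit = output_limit
        self.integral = 0.0
        self.previous_error = None

    def update(self, error, dt):
        """Return the control output for the current error and time step."""
        self.integral += error * dt
        if self.previous_error is None:
            derivative = 0.0  # no error history on the first call
        else:
            derivative = (error - self.previous_error) / dt
        self.previous_error = error
        output = self.kp * error + self.ki * self.integral + self.kd * derivative
        return max(-self.output_limit, min(self.output_limit, output))


def simulate_rate_step(controller, setpoint=1.0, dt=0.01, duration=5.0):
    """Drive a toy plant (rate_dot = command) toward a rate setpoint."""
    rate = 0.0
    for _ in range(int(round(duration / dt))):
        command = controller.update(setpoint - rate, dt)
        rate += command * dt
    return rate


# Hypothetical gains; the printed value is the rate reached after 5 s.
print(simulate_rate_step(PID(kp=5.0, ki=0.0, kd=0.0)))
```

Changing the gains in the last line and observing how quickly, or whether, the rate settles at the setpoint gives a small-scale feel for what tuning adjusts on the real vehicle.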

In summary, low-level controller tuning is crucial for UAV applications as it directly affects flight stability, control precision, responsiveness, adaptability, and robustness. It is an essential step in optimizing the performance and safety of UAV operations, ensuring reliable and effective flight control for various applications across different industries and domains.

Conclusion

Over the course of this project I was able to extend my knowledge of robotic applications while enduring many ups and downs along the way. This greatly helped me with my research when controller development testing was required. The motivation behind this documentation is to improve this experience for other researchers, robotic developers, or hobbyists who have a desire to learn fundamental robotic applications, which are beginning to shape the world we know today. These details can be explored in a GitBook for those who are interested.

I provided many details on the interworking components required to achieve an indoor autonomous flight setup with the COEX Clover platform. With an extensive background in UAV control, I tried to provide a basic understanding of this for the reader's benefit. There are many more sections I would like to include, along with improving upon the existing ones. A few examples include firmware testing with hardware-in-the-loop simulations, advanced trajectory generation, and an extensive list of flight examples for the Gazebo simulator with application to hardware.

Lastly, I would like to thank the entire COEX team that made this project possible by providing a wonderful platform with support. I would like to give a special thanks to Oleg Kalachev for helping me debug and succeed through applied learning. With that being said, I hope you all enjoy the resourceful content provided, and I plan on releasing more detailed documents on other interesting topics as I progress through my research and development.